(Crossposted from the CERT.at blog)

Back in January 2024, I was asked by the Belgian EU Presidency to moderate a panel during their high-level conference on cyber security in Brussels. The topic was the relationship between cyber security and law enforcement: how do CSIRTs and the police / public prosecutors cooperate, what works here and where are the fault lines in this collaboration. As the moderator, I wasn’t in the position to really present my own view on some of the issues, so I’m using this blogpost to document my thinking regarding the CSIRT/LE division of labour. From that starting point, this text kind of turned into a rant on what’s wrong with IT Security.

When I got the assignment, I recalled a report I had read years ago: “Measuring the Cost of Cybercrime” by Ross Anderson et al. from 2012. In it, the authors try to estimate the effects of criminal actors on the whole economy: what are the direct losses and what are the costs of the defensive measures put in place to defend against the threat. The numbers were huge back then, and as various speakers during the conference mentioned: the numbers have kept rising and rising and the figures for 2024 have reached obscene levels. Anderson et al. write in their conclusions: “The straightforward conclusion to draw on the basis of the comparative figures collected in this study is that we should perhaps spend less in anticipation of computer crime (on antivirus, firewalls etc.) but we should certainly spend an awful lot more on catching and punishing the perpetrators.”

Over the last years, the EU has proposed and enacted a number of legal acts that focus on the prevention of, detection of, and response to cybersecurity threats. Following the original NIS directive from 2016, we are now in the process of transposing and thus implementing the NIS 2 directive with its expanded scope and security requirements. This imposes a significant burden on huge numbers of “essential” and “important entities” which have to heavily invest in their cybersecurity defences. I failed to find a figure in Euros for this, only the estimate of the EU Commission that entities new to the NIS game will have to increase their IT security budget by 22 percent, whereas the NIS1 “operators of essential services” will have to add 12 percent on top of their current spending levels. And this isn’t simply CAPEX: there is a huge impact on operational expenses, including manpower, and on the flexibility of the entity.
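To get a feeling for what these percentages mean in absolute terms, here is a minimal sketch. The baseline budget is a made-up number purely for illustration; only the 22 and 12 percent figures come from the Commission’s estimate quoted above.

```python
# Illustration of the estimated NIS 2 budget uplifts.
# The baseline budget below is a hypothetical number, not a figure from any report.

NEW_ENTITY_UPLIFT_PERCENT = 22   # entities new to the NIS regime
NIS1_ENTITY_UPLIFT_PERCENT = 12  # existing NIS1 operators of essential services


def additional_spend(current_budget_eur: int, uplift_percent: int) -> float:
    """Return the extra IT security spend implied by a percentage uplift."""
    return current_budget_eur * uplift_percent / 100


if __name__ == "__main__":
    baseline = 1_000_000  # assumed current IT security budget in EUR
    new_entity = additional_spend(baseline, NEW_ENTITY_UPLIFT_PERCENT)
    nis1_entity = additional_spend(baseline, NIS1_ENTITY_UPLIFT_PERCENT)
    print(f"Entity new to NIS: +{new_entity:,.0f} EUR")
    print(f"NIS1 operator:     +{nis1_entity:,.0f} EUR")
```

Multiply that by the number of entities in scope and the order of magnitude becomes clear, even without an official figure.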

This all adds up to a huge cost for companies and other organisations.

What is happening here? We would never ever tolerate that kind of security environment in the physical world, so why do we allow it to happen online?

The physical world

So, let’s look at the playing field in the physical environment and see how the security responsibilities are distributed there:

Defending against low-level crime is the responsibility of every citizen and organisation: you are supposed to lock your doors, you need to screen the people you’re allowing to enter and the physical defences need to be sensible: Your office doesn’t need to be a second Fort Knox, but your fences / doors / gates / security personnel need to be adequate to your risk profile. They should be good enough to either completely thwart normal burglars or at least impose such a high risk on them (e.g., required noise and time for a break-in) that most of them are deterred from even trying.

One of the jobs of the police is to keep low-level crime from spiraling out of control. They are the backup that is called by entities noticing a crime happening. They respond to alerts raised by entities themselves, their burglar alarms and often their neighbours.

Controlling serious, especially organized crime is clearly the responsibility of law enforcement. No normal entity is supposed to be able to defend itself against Al Capone style gangs armed with submachine guns. This is where even your friendly neighbourhood cop is out of his league and the specialists from the relevant branches of the security forces need to be called in. That doesn’t mean that these things never happen at all: there is organized crime in the EU, and it might take a few years before any given gang is brought under control.

Defending against physical incursions by another country is the job of the military. They have the big guns; they have the training and thus the means to defend the country from outside threats. Hopefully, they provide enough deterrence that they are not needed. Ideally, your diplomats and politicians have also worked to create an international environment in which no other nation even contemplates invading your country.

We can see here a clear escalation path of physical threats and how the responsibility to deal with them shifts accordingly.

The online world

Does the same apply to cyber threats? And if not, why?

The basics

The equivalent of putting a simple lock on your door is basic cyber hygiene: Firewalls, VPNs, shielding management interfaces, spam and malspam filters, decent patch management, as well as basic security awareness training. Hopefully, this is enough to stop being a target of opportunity, where script kiddies or mass exploitation campaigns can just waltz into your network. But there is a difference: the risk of getting caught simply for trying to hack into a network is very low. Thus, these actors can just keep on trying over and over again. Additionally, this can be automated and run on a global scale.

In the real world, intrusion attempts do not scale at all. Every single case needs a criminal on site and that limits the number of tries per night and incurs a risk of being caught at each and every one of these. The result is that physical break-in attempts are rare, whereas cyber break-in attempts are so frequent that the industry has decided that “successful blocks on FW or mail-relay level per day” are no longer sensible metrics for a security solution.

And just forget about reporting these to the police. Not all intrusion attempts are actually malicious (a good part of CERT.at’s data-feeds on vulnerabilities is based on such scans), the legal treatment of such acts is unclear (especially on an international level), and the sheer mass of it overwhelms all law enforcement capabilities. Additionally, these intrusion attempts are usually cross-border, necessitating international police collaboration. The penalties for such activities (malicious scans, sending malspam, etc.) are also often too low to qualify for international efforts.

In the physical world, the perpetrators must be present at the site of their victims. We’re not yet at the stage where thieves and burglars send remote controlled drones to break into houses and steal valuables there – unless you count the use of hired and expendable low-level criminals as such. There is thus no question about jurisdiction and the possibility of the local police to actually enforce the law. Collecting clues and evidence might not always be easy, and criminals fleeing the country before being caught is a common trope in crime literature. Nevertheless, there is the real possibility that the police can successfully track and then arrest the criminals.

The global nature of the Internet changes all this. As the saying goes: there is no geography on the Internet, everyone is a direct neighbour to everybody else. Just as any simple website is open to visitors from all over the world, it can be targeted by criminals from all over the globe. There is no need for the evil hackers to be on the same continent as their targets, let alone in the same jurisdiction. Thus, even if the police can collect all the necessary evidence to identify the perpetrators, it cannot just grab them off the street – they might be far out of reach of the local law enforcement.

And another point is different: usually, physical security measures are quite static. There is no monthly patch-day for your doors. I can’t recall any situation where a vendor of safes or locks had to issue an alert to all customers that they have to upgrade to new cylinders because a critical vulnerability was found in the current version (although watching LPL videos is a good argument that they should start doing that). Recent reports on vulnerabilities in keyless fobs for unlocking cars show that the lines between these worlds are starting to blur.

Organized crime

What about serious, organized crime? The online equivalent to a mob boss is a “Ransomware as a Service (RaaS)” group: they provide the firepower, they create an efficient ecosystem of crime and they make it easier for low-level miscreants to start their criminal careers. Examples are Locky, REvil, DarkSide, LockBit, Cerber, etc. Yes, sometimes law enforcement, through long-running international collaboration between agencies, is able to crack down on larger crime syndicates. Those take-downs vary in their effectiveness. In some cases, the police manage to get hold of the masterminds, but often enough they just get lower or mid-level people and some of the technical infrastructure, leading to only a temporary reprieve for the victims of the RaaS shop.

Two major impediments to the effectiveness of these investigations are, first, the global nature of such gangs and thus the need for truly global LE collaboration and, second, the ready availability of compromised systems to abuse and of malicious ISPs who don’t police their own customers. Any country whose police force is not cooperating effectively creates a safe refuge for the criminals. The current geo-political climate is not helpful at all. Right now, there simply is no incentive for the Russian law enforcement to help their western colleagues by arresting Russian gangs targeting EU or US entities. Bullet-proof hosters are similar: they rent criminals the infrastructure from which to launch attacks. And often enough the perpetrators simply use the infrastructure of one of their victims to attack the next.

The end result is that serious cybercrime is rampant. Companies and other organisations must defend themselves against well-financed, experienced, and capable threat-actors. As it is, law enforcement is not capable of lowering the threat level far enough to take that responsibility away from the operators.

Nation states

The next escalation step is the nation state attacker. These attacks come in (at least) two types: Espionage and Disruption.

Espionage is nothing new; the employment of spies traces back to the ancient world. But just as with cybercrime, in the new online world it is no longer necessary to send agents on dangerous missions into foreign countries. No, a modern spy has a 9 to 5 desk job in a drab office building where the highest risk to his personal safety is a herniated vertebral disc caused by unergonomic desks and chairs.

It’s been rare, but cyber-attacks with the aim of causing real world disruptions have appeared over the last ten years, especially in the Russia/Ukraine context. The impact can be similar to Ransomware: the IT systems are disabled and all the processes supported by those systems will fail. The main difference is that you can’t simply buy your way out of a state-sponsored disruptive attack. There have been cases where the attackers tried to inflict physical damage on either the IT systems (bricking of PCs in the Aramco attack) or on machinery controlled by industrial control systems.

This is a frustrating situation. We’re in a defensive mode, trying to block and thwart attack after attack from well resourced adversaries. As recent history shows, we are not winning this fight – cybercrime is rampant and state-sponsored APTs are running amok. Even if one organisation manages to secure its own network, the tight interconnectedness with and dependency on others will leave it exposed to supply chain risks.

What can we do about this?

Such a situation reminds me of the old proverb: “if you can’t win the game, change the rules”. I simply do not see a simple technical solution to the IT security challenge. We’ve been sold these often enough under various names (firewalls, NGFW, SIEMs, AV, EDR, SOAR, cloud-based detection, sandboxes to detect malicious e-mail, …) and while all these approaches have some value, they are fighting the symptoms, but not the cause of the problem.

There certainly are no simple solutions, and certainly none without significant downsides. I’m thus not proposing that the following ideas need to be implemented tomorrow. This article is just supposed to move the Overton Window and start a discussion outside the usual constraints.

So, what ideas can I come up with?

Really invest in Law Enforcement

The statistics show every year that cyber-crime is rising. This is followed by a ritual proclamation of the minister in charge that we will strengthen the police force tasked with prosecuting cyber-crime. The follow-through just isn’t there. Neither the police, nor the judiciary is in any way staffed to really make a dent in cybercrime as a whole.

They are fighting a defensive war, happy with every small victory they can get, but overall they are simply not staffed at a level where they really could make a difference.

Denial of safe havens

Criminals or other attackers need some infrastructure from which to stage their attacks. Why do we tolerate this? Possible avenues for a change are:

- Revisit the laws that shield ISPs from liabilities regarding misbehaving customers. This does not need to be a complete reversal, but there need to be clear and strong incentives not to allow customers to stage attacks from an ISP’s network. See below for more details.

- And on the other side, refuse to route the network blocks from ISPs who are known to tolerate criminals on their network. Back on Usenet, this was called the “UDP – Usenet Death Penalty”: when you don’t police your own users’ misbehaviour on this global discussion forum, then other sites will decide not to accept any articles from your cesspool any more.
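The second idea boils down to a simple membership test: does traffic originate from a network block we have decided not to talk to? The following is only a sketch of that decision in Python. In practice, this kind of filtering happens in routers and routing policy, not in application code. The blocklist entries are reserved documentation ranges standing in for hypothetical bad networks, and `is_blocked` is a name invented for this example.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges,
# used here as stand-ins for the network blocks of a non-cooperating ISP.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked(source_address: str) -> bool:
    """Return True if the address falls inside any blocked network."""
    addr = ipaddress.ip_address(source_address)
    # An IPv4 address is never "in" an IPv6 network and vice versa,
    # so mixed lists are safe to check in one pass.
    return any(addr in network for network in BLOCKED_NETWORKS)


if __name__ == "__main__":
    for candidate in ("192.0.2.55", "203.0.113.9", "2001:db8::1"):
        verdict = "drop" if is_blocked(candidate) else "accept"
        print(f"{candidate}: {verdict}")
```

The technical part is trivial. The hard part is agreeing on who maintains such a list and by which criteria a network ends up on it.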

The aim must be the end of “bulletproof” hosters. There have been prior successes in this area, but we can certainly do better on a global scale.

Don’t spare abused systems

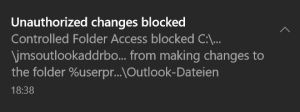

Instead of renting infrastructure from bulletproof hosting outfits, the criminals often hack into an unrelated organisation and then abuse its systems to stage attacks from. Abused systems range from simple C2 proxies on compromised websites, systems used for DDoS amplification and accounts for sending spam mails to elaborate networks of proxies on compromised CPEs.

These days, we politely warn the owners of the abused devices and ask them nicely to clean up their infrastructure.

We treat them as victims, and not as accomplices.

Maybe we need to adjust that approach.

Mutual assured cyber destruction

As bad as the cold war was, the concept of mutual assured destruction managed to deter the use of nuclear weapons for over 70 years. Right now, there is no functioning deterrence on the Internet.

I can’t say what we need to do here, but we must create a significant barrier to the employment of cyberattacks. Right now, most offensive cyber activities are considered “trivial offences”, maybe worth a few sternly worded statements, but nothing more. The EU Cyber Diplomacy Toolbox is a step in that direction, but is still rather harmless in its impact.

We can and should do more.

Broken Window Theory

From Wikipedia: “In criminology, the broken windows theory states that visible signs of crime, antisocial behavior, and civil disorder create an urban environment that encourages further crime and disorder, including serious crimes.”

To put this bluntly: As we haven’t managed to solve the Spam E-mail problem, why do we think we can tackle the really serious crimes?

Thus, one possible approach is to set aside some investigative resources in the law enforcement community to go after the low-level, but very visible criminals. Take for example the long running spam waves promoting ED pills. Tracking the spam source might be hard, but there is a clear money trail on the payment side. This should be an eminently solvable problem. Track those gangs down, make an example out of them and let every other criminal guess where the big LE guns will be pointing at next.

As a side effect, the criminal infrastructure providers who support both the low level and the more serious cybercrime might also feel the heat.

Offer substantial bounties

We always say that ransomware payments are fuelling the scourge. They provide RaaS gangs with fresh capital to expand their operations and it is a great incentive for further activities in that direction.

So, what about the following: decree by law that if you’re paying a ransom, then you have to pay 10% of the ransom into a bounty fund that incites operators in the ransomware gangs to turn in their accomplices.

Placing bounties on the heads of criminals is a very old idea and has proven effective at creating distrust and betrayal in criminal organisations.
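The arithmetic of such a levy is trivial, which is part of its appeal. A minimal sketch, assuming the 10% rate suggested above; the ransom amount and the function name are hypothetical:

```python
from decimal import Decimal

BOUNTY_RATE = Decimal("0.10")  # the 10% levy floated in the text


def bounty_contribution(ransom_paid: Decimal) -> Decimal:
    """Return the amount owed to the bounty fund, rounded to cents."""
    return (ransom_paid * BOUNTY_RATE).quantize(Decimal("0.01"))


if __name__ == "__main__":
    ransom = Decimal("500000")  # hypothetical ransom payment
    print(f"Ransom paid:       {ransom}")
    print(f"Owed to the fund:  {bounty_contribution(ransom)}")
```

Every payment would thus grow the pot available to anyone inside a gang who is willing to turn on their accomplices.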

Liability of Service Providers

Criminals are routinely abusing the services offered by legitimate companies to further their misdeeds. Right now, the legal environment is shielding the companies whose services are abused from direct liability regarding the actions of their customers.

Yes, this liability is usually not absolute: often there is a “knowingly” or “repeatedly” or “right to respond to allegations” in the law that absolves the service providers from having to proactively search for, or quickly react to reports of, illegal activities originating from their customers.

We certainly can have a second look at these provisions.

Not all service providers should be treated the same way: a small ISP offering to host websites has vastly smaller resources to deal with abuse than the hyperscalers with stock market valuations in the billions of Euros. The impact of abuse scales in about the same way: a systematic problem at Google is much more relevant than anything a small regional ISP can cause.

Spending the same few percentage points of their respective revenue on countering abuse can give the abuse handling teams of big operators the necessary punch to really be on top of abuse on their platforms and do it 24×7 in real-time.

We need to incentivise all actors to take care of the issue.

Search Engine Liability

By using SEO techniques or via simply buying relevant advertisement slots, criminals sometimes manage to lure people looking for legitimate free downloads to fake download sites that offer backdoored versions of the programs that the user is looking for.

Given the fact that this is a very lucrative market for search engine operators, there should be no shortage of resources to deal with this abuse, either proactively or in near real time when it is reported.

And I really mean near real-time. Given e.g., Google’s search engine revenue, it is certainly possible to resolve routine complaints within 30 minutes, with 24×7 coverage. If they are not able to do it, make them both liable for damages caused by their inaction and impose regulatory fines on them.

For smaller companies, the response time requirements can be scaled down to levels that even a mom & pop ISP can handle.
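How such a size-dependent requirement could be expressed is sketched below. Only the 30 minutes for the largest operators comes from the text above; the revenue thresholds and the other deadlines are assumptions chosen purely for illustration, as are all names in the code.

```python
from datetime import timedelta

# Hypothetical tiers: (minimum annual revenue in EUR, maximum response time).
# Only the 30-minute figure is taken from the text; the rest is assumed.
DEADLINE_TIERS = [
    (1_000_000_000, timedelta(minutes=30)),  # hyperscalers
    (10_000_000, timedelta(hours=4)),        # mid-sized operators (assumed)
    (0, timedelta(hours=24)),                # mom & pop ISPs (assumed)
]


def abuse_response_deadline(annual_revenue_eur: int) -> timedelta:
    """Return the maximum time allowed to resolve a routine abuse report."""
    for minimum_revenue, deadline in DEADLINE_TIERS:
        if annual_revenue_eur >= minimum_revenue:
            return deadline
    raise ValueError("annual revenue must not be negative")


if __name__ == "__main__":
    for revenue in (50_000_000_000, 25_000_000, 200_000):
        print(f"{revenue:>14,} EUR -> {abuse_response_deadline(revenue)}")
```

Where exactly the thresholds should sit is a policy question. The point is merely that a tiered rule is easy to state and easy to check.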

Content Delivery Network liability

The same applies to content delivery networks: such CDNs are often abused to shield criminal activities. By hiding behind a CDN, it becomes harder to take down the content at the source, it becomes tricky to just firewall off the sewers of the Internet and even simple defensive measures like blocking JavaScript execution by domain are disrupted if the CDN serves scripts from their domains.
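The last point, about script blocking by domain, is easy to demonstrate. The sketch below uses made-up hostnames under the reserved `.example` TLD; `script_allowed` is a hypothetical stand-in for what a browser extension or filtering proxy would do.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist of script sources, keyed by hostname.
BLOCKED_SCRIPT_DOMAINS = {"malicious.example"}


def script_allowed(script_url: str) -> bool:
    """Allow a script unless its hostname is on the blocklist."""
    hostname = urlsplit(script_url).hostname or ""
    return hostname not in BLOCKED_SCRIPT_DOMAINS


if __name__ == "__main__":
    urls = [
        # Served directly by the bad actor: the filter catches it.
        "https://malicious.example/loader.js",
        # The same script behind a shared CDN hostname: it slips through.
        "https://cdn.example/malicious/loader.js",
        # A legitimate library on the same CDN hostname.
        "https://cdn.example/libs/framework.js",
    ]
    for url in urls:
        print(f"{'allow' if script_allowed(url) else 'block'}  {url}")
```

The defender is left with two bad options: let the second URL through, or block the shared CDN hostname and break every legitimate site that loads the third.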

Cloudflare boasts that a significant share of all websites is now served using their infrastructure. Still, they only commit to a 24h reaction time on abuse complaints for things like investment fraud.

With great market-share comes great responsibility.

We really need to forcibly re-adjust their priorities. It might be a feel-good move for libertarians to enable free speech, and sometimes controversial content really needs protection. But Cloudflare is acting like a polluter who doesn’t really care what damage their actions cause to others.

Even in the libertarian heaven, good behaviour is triggered by internalizing costs by making liabilities explicit.

Webhoster liability

The same applies to the actual hosters of malicious content. In the western world, we need to give webhosters a size-dependent deadline for reacting to abuse-reports. For the countries that do not manage to create and enforce similar laws, the rest of the world needs to react by limiting the reachability of non-conforming hosters.

Keeping the IT market healthy

Market monopolies are bad for security. They create a uniform global attack surface and distort the security incentives. This applies to software, to the hardware/firmware side, to the cloud, and to the ISP ecosystem.

What can the military do?

In the physical world, the military is the ultimate deterrent against nation state transgressions. This is really hard to translate to cyber-security. I mentioned MAD above. This is really tricky: what is the proper way of retaliation? How do we avoid a dangerous escalation of hack, hack-back and hack-back-back?

Or should we relish the escalation? A colleague recently mentioned that some ransomware gang claimed to have hacked the US Federal Reserve and is threatening to publish terabytes of stolen data. I half joked by replying with “If I were them, I’d start to worry about a kinetic response by the US.”

There are precedents. Some countries are well known to react violently if someone decides to take one of their citizens as hostage. No negotiations. Only retribution with whatever painful means are available.

Some cyber-attacks have an impact similar to that of violent terrorist attacks: just look at the ripple effect on hospitals in London following the attack on Synnovis. So why should our response portfolio against ransomware actors rule out some of the options we keep open for terrorists?

Free and open vs. closed and secure

Overall, there seem to be two major design decisions that have a major cyber security impact.

First, the Internet is a content-neutral, global packet-switched network, for which there is only a very limited consensus regarding the rules that its operators and users should adhere to. And there are even fewer global enforcement possibilities for the few rules that we can agree on.

On one hand, this is good. We do not want to live in a world where the standards for the Internet are set and enforced by oppressive regimes. The global reach of the Internet is also a net positive: it is good that there is a global communication network that interconnects all humans. Just as the phone network connects all countries, the global reach of the Internet has the potential to foster communication across borders and can bring humanity together. We want dissidents in Russia and China to be able to communicate with the outside world.

On the other hand, this leads to the effects described in the first section: geography has no meaning on the Internet; thus, we’re importing the shadiest locations of the Internet right into our living rooms.

We simply can’t have both: a global, content agnostic network that reaches everybody on the planet, and a global network where the behaviour that we find objectionable is consistently policed.

The real decision is thus where to compromise: On “global”, by e.g. declining to be reachable from the swamps of the Internet, or on “security”: live with the dangers that arise from this global connectivity.

The important part here is: this is a decision we need to take. Individually, as organisations and, perhaps, as a country.

We face a similar dilemma with our computing infrastructure: The concept of the generic computer, the open operating systems, the freedom to install third-party programs and the availability of accessible programming frameworks plus a wealth of scripting languages are essential for the speed of innovation. A closed computing environment can never be as vibrant and successful.

The ability to run arbitrary new code is a boon for innovation, but it also creates the danger of malicious code being injected into our systems. Retrofitting more control here (application allowlisting, signed applications, strong application isolation, walled garden app-stores, …) can mitigate some of the issues, but will never reach the security properties of a system that was designed to run exactly one application and doesn’t even contain the foundations for running additional code.

Again, there is a choice we need to make: do we prefer open systems with all their dangers, or do we try to nail things down to lower the risks? This does not need to be a global choice: we should probably choose the proper flexibility vs. security setting depending on the intended use of an IT system. A developer’s box need not have the same setting as a tablet for a nursing home resident.
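To make one of these retrofits concrete: the core of application allowlisting is a check of a program’s cryptographic hash against a list of approved hashes. The following is a toy sketch of that idea only, not how any particular product implements it; the “program” is a made-up byte string and all names are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical approved program. A real system would hash files on disk.
APPROVED_PROGRAM = b"print('hello from the approved tool')"
ALLOWLIST = {sha256_hex(APPROVED_PROGRAM)}


def may_run(program: bytes) -> bool:
    """Permit execution only if the program's hash is on the allowlist."""
    return sha256_hex(program) in ALLOWLIST


if __name__ == "__main__":
    tampered = APPROVED_PROGRAM + b"\n# injected payload"
    print("approved program:", may_run(APPROVED_PROGRAM))
    print("tampered program:", may_run(tampered))
```

Note what this does not cover: an approved interpreter or script host will happily run whatever input it is fed, which is one reason why such retrofits only mitigate the problem.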

Technical solutions – just don’t be easily hackable?

In an ideal world, our IT systems would be perfectly secure and would not be easy prey for cyber-criminals and nation state actors. Yes, any progress in securing our infrastructure is welcome, but we cannot simply rely on this path. Nevertheless, there are a few low-hanging fruits we need to pick:

Default configurations: Networked devices need to come with defaults that are reasonably secure. Don’t expect users to go through all configuration settings to secure a product that they bought. This can be handled via regulation.

Product liability is also an interesting approach. This is not trivial to get right, but certain classes of security issues are so basic that failing to protect against them amounts to gross negligence in 2024. For example, we recently saw several path traversal vulnerabilities in edge-devices sold in 2024 by security companies with more than a billion-dollar market cap. Sorry, such bugs should not happen in this league.

The Cyber Resilience Act is an attempt to address this issue. I have no clue whether it will actually work out well.

While I hope that we will manage to better design and operate our critical IT infrastructure in the future, this is not where I’d put my money. We’ve been chasing that goal for the last 25 years and it hasn’t been working out so great.

We really need to start thinking outside the box.