Explicit Inversive Pseudorandom

Number Generators

Diplomarbeit

zur Erlangung des Magistergrades

an der Naturwissenschaftlichen Fakultät

der Universität Salzburg

eingereicht von

Otmar Lendl

Salzburg, im November 1996

Contents

As a rule, random number generators are fragile and need to be treated with

respect. It’s difficult to be sure that a particular generator is good without an

enormous amount of effort in the various statistical test.

The moral is: do your best to use a good generator, based on the mathematical

analysis and the experience of others; just to be sure, examine the numbers to

make sure that they “look” random; if anything goes wrong, blame the

random-number generator !

– Robert Sedgewick, in “Algorithms” (Second Edition, 1988)

I’d like to thank everybody who helped to make this thesis become reality. Peter

for his patience, trust and guidance; Charly, Hannes, Karin, and Stefan for the most

creative, helpful and entertaining working environment I have ever experienced; and

my mother for her unwavering support.

Chapter 1

Introduction

The purpose of this thesis is to discuss the implementation of the explicit inversive

congruential generator (EICG) and the properties of the resulting pseudorandom

numbers. But before we delve into the details of the implementation or the theoretical

and empirical results we will take a closer look at the basic concept of pseudo-random

numbers.

What do we mean when we talk about pseudorandom numbers (PRN) ? And for

what purpose do we devise such elaborate means to artificially generate megabytes of

digital noise ?

1.1 What do we need Pseudo-Random Numbers for ?

To the uninitiated, all this pseudo-random numbers “business” seems to have

no serious applications. Everybody will come up with computer games as a

field where pseudo-random numbers are used to make the behaviour of the

computer less predictable. Steering the movements of some on-screen monster

does not require a high standard of randomness, almost any algorithm will

suffice, provided it is easy to implement and does not cost too much computing

resources.

Another domain where we need PRN is wherever we need to model a more or less

random phenomenon of the real world. The simulation of a roulette table or other

forms of lottery might still be in the area of non-serious application, but here

the defects of the generator start to be an issue. Imagine this scenario: you

try to develop a winning strategy for blackjack and use a simulation to test

your algorithm. Any correlation between statistical defects and the strategy

will lead to a skewed result and may even change the sign of the expected

outcome. Playing that strategy in a real casino might cost you dearly. Thus it is

important to make the choice of the generator an issue even in such non-scientific

applications.

Simulation of random events is far from being limited to gambling, a significant

percentage of all simulations of natural phenomena contains a random component.

Whether that may be quantum effects, rainfall on a certain area, Brownian motion,

absorption pattern, bifurcation of tree roots, failure of technical components, solar

activity …, in all cases we know at best the statistical properties of an event. An

analytical solution of the given problem based on probabilities is often not possible.

Thus one has to resort to stochastic simulation (see [3, 48, 75]) where one calculates

the result of the overall simulation by choosing possible outcomes of the underlying

random events according to their respective probability. Doing this a number of times

should provide enough samples of the outcome to estimate the probability of each

possible result. Needless to say, the selection of the realisations of the underlying

random events is crucial to the correctness of the whole calculation. Since this selection

is done with the aid of PRN, their quality plays an important role in the whole

process.

Finding the use of PRN in stochastic simulation is not that surprising, but finding

them in algorithms for such mundane tasks like integration might need some more

explanation. Numerical integration is a common problem in a great deal of real world

problems. A big battery of algorithms (trapezoid method, Simpson’s method, spline

quadrature, adaptive quadrature, Runge-Kutta, …) was developed to minimize the

calculation costs while increasing the accuracy of the result. All these methods scale

very badly with the dimension of the integral, so a completely different approach is

more appropriate there. The Monte Carlo method (see [3, 48, 78, 84]) uses randomly

selected samples of the function to estimate the integral. Provided we know

more about the behaviour of the function (i.e. its total variation) and the

distribution of the actual samples used (as measured by their discrepancy), the

inequality of Koksma-Hlawka (See page , [51, 70]) will give an error bound for

this method. Since it is generally not possible to calculate the exact value of

the discrepancy of the random numbers used for the integration, this error

bound will only be a probabilistic one. In order to get a deterministic one, the

random numbers, which determine which samples of the function will be

evaluated, are replaced by numbers for which the order of the discrepancy

is known. This turns the Monte Carlo method into the Quasi-Monte Carlo

method.

One way to get such good point sets is to explicitly construct

them with that goal in mind (See [70] for a discussion of

-nets

and other methods.) or use a PRNG for which an upper bound on the discrepancy is

known. This is one of the reasons why we will take a close look at this quantity in

Section 3.3. Not all applications of Quasi-Monte Carlo integration are labeled

as such, as the basic algorithm can be regarded as a simple heuristic. For

example, distributed ray tracing [32, p. 788] uses a set of randomly distributed

rays to implement spatial and temporal antialiasing which amounts to an

integration over both the time-frame of the picture and the pixel’s spatial

extension.

Non-deterministic algorithms often use pseudorandom numbers, too. These

algorithms are used to tackle problems for which a deterministic solution takes too

much time. Although they cannot guarantee success, they promise to find the solution

(or a sub-optimal one) within reasonable time. Examples for this kind of algorithm are

Pollard’s rho heuristic [8, p. 844] for integer factorization, the Rabin-Miller

primality test [8, p. 839], Simulated Annealing [31], and Threshold Accepting

[11].

Other algorithms use pseudorandom numbers for a different purpose. Instead of

using them directly for solving the problem they are used to randomize the problem

(or the algorithm) in order to avoid running into the same worst-case behaviour again

and again. See [8, p. 161] for an explanation of the rationale behind randomized

quick-sort.

Some cryptographic algorithms and protocols require a good source of random

numbers, too. Stream ciphers [77, p. 168f], for example, use the output of a

PRNG (termed keystream generator) to encrypt the plaintext. The security of this

cipher depends largely on the statistical quality of the keystream. Any regularities of

the PRNG can be used by the attacker to predict the next bits and thus crack the

code. No other domain of PRNG applications has such a high demand on the

“randomness” of the generated PRN. Algorithms which are good enough for

stochastic simulations are typically way too predictable to be useful as a

keystream generator for a stream cipher. Thus the field of cryptographically

secure PRNG has amazingly little in common with the study of PRNG for

stochastic simulation on which we will focus in this thesis. Information on

cryptographically secure pseudorandom numbers can be found in [52], [82], and

[77].

Another application of pseudo-random numbers in the field of cryptology is

providing the “random” numbers needed for a variety of cryptographic protocols. A

well known example are the session keys generated for each transaction in hybrid

cryptosystems. As the recent debacle involving the Netscape Navigator [33, 69] has

shown, one must be very careful not to use a simple PRNG for this task. Since this is

more a matter of how to get the entropy needed for non-predictability than one of

analysing the properties of sequences of PRN we will not elaborate on this subject in

this thesis.

1.2 Criteria for PRN Generator Selection

Now that we know a bit about the various applications of PRN, let’s try to formulate

a few criteria for the selection of a good PRN generation algorithm. As we will see

later it is crucial for the selection of the right PRNG to keep an eye on the application

of the PRN.

1.2.1 Reproducibility

This criterion may sound strange at first sight, since reproducibility contradicts the

intuitive notion of randomness, and indeed, real random number generators are

extremely unlikely to ever repeat their output. So what are the advantages of a

generator which will produce the same sequence of pseudorandom numbers when fed

with the same parameters ? Once again, we have to turn to the application of which

the generator is a component. In the case of a stochastic simulation the benefit is

twofold:

- As a scientific experiment, it should be possible to redo the same calculations

under the same conditions. This ensures that an independent verification

of the obtained result is possible.

- Debugging and verifying the simulation program is greatly helped by the

possibility of replaying the calculation. Otherwise it may not be possible to

determine if an unexpected outcome is caused by a systematic error in the

simulation setup, or whether it is just a statistic fluke.

In some areas, for example stream ciphers, reproducibility is a key requirement for

the application. Only very few applications, most of them in the area of cryptography,

do actually benefit from the use of non-reproducible PRN.

1.2.2 Statistical properties

It is clear that when we want to simulate a random variable with a PRNG, then the

output of the generator should model as closely as possible the expected behaviour of

instances of the random variable. If a simulation of a dice generates a 7 or

strongly favors the 6 we will not accept the generator. Other deviations from the

desired behaviour, e.g. correlations, are harder to detect, and methods for

systematically testing generators for such deficiencies have been the subject of

considerable mathematical work [50, 28, 54, 85], including some parts of this

thesis.

As we will see later, proving that a generator really has all the statistical

properties a real random number generator is supposed to have, is not possible.

So all we can do is to establish faith in the generator by testing it for some

properties.

1.2.3 Empirical Test Results

Empirical testing usually involves using the PRN for a stochastic simulation with a

known result. If the computed results contradict the expected ones, the generator will

be dismissed as not suitable for that kind of stochastic simulation. A passed test will

increase the faith that this generator will yield correct results in real world

problems. We will examine the significance of empirical test results later in greater

details.

A large battery of such tests was developed over the years, from the well known

tests of Knuth [50] and Marsaglia [66] to recent additions like the weighted spectral

test [40, 41, 46, 44]. See [54, §3.5.] for further references on testing pseudorandom

number generators.

1.2.4 Possibility of Theoretical Analysis

In order to make analytical investigations possible, most modern PRNG are defined in

quite simple mathematical terms. It is a tradeoff: The simpler the algorithm, the easier

it will be to prove statements concerning the quality of the generated numbers. On the

other hand, a convoluted algorithm appeals to the intuition. History has shown

[50, 74] that quite a few people could not resist the temptation to build generators

based on doing obscure transformations on numbers stored in computers.

Empirical analysis has shown that the quality of such generators are often

abysmal.

1.2.5 Results of Theoretical Analysis

Doing empirical studies on the properties of a PRNG is always possible, but

deriving properties of the generator output by pure mathematical study has a

lot of advantages. Whereas an empirical test can only cover one specific set

of parameters of a generator, it is sometime possible to make analytically

proven statements on the properties of PRN generated by a certain generator

regardless of the parameters used. In the same vein, an empirical test on a specific

part of the generator’s output, say the first billion numbers, may give us

confidence on the behaviour of the next billion numbers, but cannot offer any

guarantee that they will be equally good. Analytical results fall in the following

categories:

- Basic parameter selection

For most generators not all possible parameters will result in a functional

generator. A typical question is that of gaining the largest possible period

length. For the LCG

this is just a set of simple conditions, for the ICG it involves finding IMP

polynomials [6, 30, 45, 42, 43, 25]. For compound generators to work it

is also necessary to obey certain analytically derived constraints.

- Properties of the resulting PRN

For some generators it is possible to derive statements on some aspects of

the output. The well-known fact that tuples of LCG generated numbers

form lattices (see page ) is one example.

- Estimates and bounds

Especially the discrepancy has been the subject of analytical study. There

are numerous estimates and bounds for various generators.

1.2.6 Efficiency

With all the mathematical discussions about the merits of PRN generated by a new

algorithm one should not forget the fact that we need to actually implement this

algorithm on a real computer. There are a few things which should be noted

here:

- Implementation costs

Implementing (i.e. programming) an algorithm is usually a one-time investment

of effort. Once the code is there, integrating it into a larger project is

more or less trivial. What are the difficulties in implementing a typical

pseudorandom number generation algorithm ? As we will see later in Chapter

5, the main problem lies in the handling of large integers and performing

standard mathematical operations like addition and multiplication on them.

For inversive generators finding the multiplicative inverse in

is a required operation, too.

- Computational costs

The execution of any algorithm requires both CPU and memory resources.

Typical PRNG (EICG, LCG, ICG, …) have only very small memory

requirements. The code is very compact and the state information does only

require a few bytes.

As far as CPU consumption is concerned, a PC with Intel 486DX2-66

processor is capable of executing the EICG algorithm about 70000 times in

a second. The author’s implementation of the LCG runs at about 400000

PRN per second. The highly optimized system pseudorandom number generator

runs at over 700000 calls per second. These numbers are only provided to

give a rough feeling for the speed of the algorithms when using a modulus in

the range of .

- Implications for the overall running time

As the generation of the PRN is usually performed on demand on the same

computer as the stochastic simulation for which they are used, they compete

for the same resources. The total running time for the simulation can be

described as the sum of the time used for the PRN generation plus the

time used for doing the actual calculations. The latter often dominates the

former, thus it does not make sense to try to gain overall speed by sacrificing

quality in the PRN algorithm.

1.2.7 Practical Aspects

After implementing the algorithm one has to find good parameters for that generator,

too. Fortunately, for some common PRNG tables containing suitable parameters have

been published [29, 43, 2, 83], so there is no need to reinvent the wheel there. Other

generators like the EICG are known to be rather insensitive to the choice of the

parameters.

Another aspect is the possibility to generate independent streams of pseudorandom

numbers. Such streams are needed for parallel or vectorized computing. See [55, §8],

[1], [12], and [36] for more information on this topic.

1.3 Important Types of PRN Generators

There is no shortage on proposed pseudorandom number generation algorithms. Every

year new ideas on this topic are published, but only if the resulting PRN have been

subject to intensive theoretical and empirical study the generator might have

a chance to get used in a real world problem. As it is often the case with

competing inventions, an objective technological superiority does not immediately

lead to market domination. Whether the generator is included in standard

programming libraries seems to be much more important than any published results

on the distribution properties of the numbers. A classic example is the now

infamous RANDU generator which was included in IBM’s Fortran library and

features an extremely poor distribution of triples composed of subsequent

numbers.

The following list introduces some of the most commonly used generators as well as

the inversive generators on which we will focus in this thesis. More complete surveys

on the current menagerie of PRNG can be found in [70, 72, 55].

In the following

denotes a positive integer (termed modulus) and

represents the system

of all residues modulo .

With the addition and multiplication modulo

the set

acquires

the algebraic structure of a finite ring. If the context makes it clear that we operate in the

ring

we will omit the trailing “mod”.

1.3.1 The Linear Congruential Generator

Definition 1.1 Let .

The linear congruential generator (abbreviated as “LCG”) with parameters

and

defines a sequence

in

by

and a sequence

of pseudorandom numbers in

by

As the sequence

is defined by a recursion of order one on a finite set it must be periodic. The longest possible

period length is

in the case of

and in the

case of .

The necessary conditions for achieving these period lengths are well known. [70, p.

169]

The LCG is very popular. Its implementation is quite simple, especially if

is chosen as 2 to the power of bits per native word of the computer (e.g.

) which

reduces the modulo operations to just ignoring the overflow. Due to its simplicity and

popularity the LCG has been subjected to intensive analytical and empirical examination.

The quality of the resulting PRN depends very much on the choice of the parameters

and

.

Fortunately, tables containing good parameters have been published, see [29, 27, 53].

The output of a LCG shows a strong intrinsic structure ([65], see also p.

). A number of modifications were proposed to improve the quality of the

generator. One approach is to extend the recursion to higher orders by making

a function of

. Other proposals

modify the function which describes the recursion. As the name says, the LCG uses the linear

function to

calculate

from . If we

replace

by an arbitrary function, we refer to the resulting PRNG as a general first-order

congruential generator [70, p. 177]. In order to guarantee maximal period length, the

function

must be carefully selected. For example, the quadratic congruential method, as

proposed by Knuth in [50, §3.2.2] uses a polynomial of degree 2 as the recursion and a

power of 2 as the modulus. See [70, p. 181f] for the conditions on the parameters and

analytical investigation on the resulting PRN.

1.3.2 Shift-register Generators

Shift-register generators differ from standard linear congruential generators

in two respects. First, they use a higher-order linear recursion of the form

|

| (1.1) |

where is the modulus,

is the order of the

recursion and are

elements of . Second,

instead of just scaling the

to the unity interval to get the pseudorandom numbers, the

are calculated from a block

of consecutive values .

Thus it is no longer necessary to use a large modulus to get a decent resolution of the resulting

PRN. In order to simplify and optimize the implementation of recursion, the common choice of

is the prime 2. On

a -bit computer this

allows the grouping of

steps into one operation.

Two techniques for the transformation of the sequence

into a sequence of

pseudorandom numbers in

are commonly used: The digital multistep method puts

|

| (1.2) |

The Tausworthe generator [81] is a special case of this method.

More popular is the generalized feedback shift-register method

(GSFR) which can take advantage of the above mentioned blocking of

bits if

are

selected suitably:

|

| (1.3) |

If the parameters are carefully selected the period length will in both cases be

.

Shift register pseudorandom numbers have the advantage of a fast generation

algorithm and a period length independent of the limitations of the integers used for

the calculation. See [70, Chapter 9] and [55] for a discussion on the properties of shift

register pseudorandom numbers.

1.3.3 The Inversive Congruential Generator

A promising modification of the LCG was proposed by Eichenauer

and Lehn in [14]. We will only consider the case of a prime modulus

here. It involves the operation of modular inversion in

which we will denote

by an overline ().

|

| (1.4) |

The restriction to prime moduli guarantees the unique existence of an inversive element in

. This definition

implies

for .

Definition 1.2 Let

be a (large) prime and .

The inversive congruential generator (abbreviated as “ICG”) with parameters

and

defines a sequence

in

by

and a sequence

of pseudorandom numbers in

by

Empirical as well as analytical investigations indicate that the output

of an ICG is superior to the output of a LCG in several respects: longer

usable sample sizes [85, 61], less correlations between consecutive numbers

[72].

1.3.4 The Explicit Inversive Congruential Generator

Analytical calculations have led to the following observation: We can describe the generator as a

function mapping

to . This

self-map in

the finite field

can be written as a uniquely defined polynomial

with degree

. If we demand the sequence

to have the maximal

possible period length ,

the polynomial

maps

onto itself and thus must be a permutation polynomial, which is either linear

() or

satisfies

according to [64, Cor. 7.5]. It turns out that the degree

plays an important role in the analytical examination of the generator in a

sense that a higher degree seems to indicate better distribution properties [70,

Theorems 8.2, 8.3] (see p. ). The theorem of Euler-Fermat tells us that evaluating

corresponds to the calculation of the multiplicative inverse. In this spirit, the

definition

of the EICG seems quite natural:

Definition 1.3 (Eichenauer-Herrmann [15]) Let

be a (large) prime and .

The explicit inversive congruential generator (abbreviated as “EICG”) with parameters

and

defines a sequence

in

by

and a sequence

of pseudorandom numbers in

by

As long as this generator

will always have period length .

Once again analytical and empirical investigations have shown that the output of this

generator is superior to that of an LCG. This will be the generator on which we will

focus our attention in this thesis. The other generators mainly serve as a reference

against which the EICG must compete.

1.3.5 EICG Variants

Two variations of the basic explicit inversive congruential generator

have been proposed. Both proposals substitute the prime modulus

with

. In the set

we can

define the modular inversion only for odd integers. This inversion is once again defined by

for all

odd .

Definition 1.4 (Eichenauer-Herrmann and Ickstadt [22]) Let

be a power of 2, and

with

and .

The explicit inversive congruential generator with power of two modulus with

parameters

and

defines a sequence

in

by

and a sequence

of pseudorandom numbers in

by

The conditions on

and guarantee that

the sequence is purely

periodic with period .

While powers of 2 as modulus have certain advantages for the implementation of

the generator, all theoretical investigations [22, 16] on the quality of the

resulting numbers have concluded that this generator is inferior to the original

EICG.

In order to achieve a period length of

,

Eichenauer-Herrmann [17] proposed the following generator:

Definition 1.5 Let

be a power of 2, and

with

and .

The modified explicit inversive congruential with parameters

and

defines a sequence

in

by

and a sequence

of pseudorandom numbers in

by

Although this modification does indeed increase the period length to

, the

theoretically derived properties of the resulting numbers are still inferior to the original

EICG.

1.3.6 Compound Techniques

An interesting meta-generator is the compound method. This is a very

simple and effective way to combine several streams of PRN into one single

sequence with (hopefully) superior properties. It works as follows: For

let

be a

purely periodic sequence of pseudorandom numbers. Then we get the compound sequence

by

If the subsequences are purely periodic with distinct period

, then we

have .

This compound method extends the well-known approach of Wichmann and Hill

[88]. The properties of the resulting sequence has been subject to a number of

publications; we refer to Niederreiter [72, 4.2] for all the references. Generally

speaking, the compound method preserves the basic properties of the underlying

generators.

Chapter 2

The Notion of “Randomness”

Examining what we mean by random numbers will help us to understand the

difficulties in generating pseudo-random numbers and interpreting test results. We will

look at how we all intuitively deal with supposedly random sequences, and touch upon

the mathematical treatment of the subject. Regrettably, we will not be able to

comprehensively cover this topic, thus we will focus on the subject of testing (finite)

sequences of PRN.

2.1 Randomness by Intuition

First of all, we want to take a closer look at the intuitive notion of randomness. For

one, we all intuitively assign probabilities to various events we encounter, from such

mundane things like which side a dropped slice of bread will land on, every-day events

like rainfall, the number of red traffic lights encountered, or friends met in the

bus, to explicitly random events like the outcome of a dice or the weekly

lottery.

But how do we come to the conclusion that one of these events is somehow random

? What are the criteria for that decision ? In some of the example above the decision is

easy as we know about the process which leads to the outcome. Watching the dice

being cast properly is a sure way to convince oneself that the outcome is

indeed truly random. But how do we proceed when we cannot look behind the

scenes, when the sequence of outcomes is the only information we have got

?

The human mind has remarkable capabilities to spot regularities in a sequence of

events. If it fails to notice anything suspicious it will declare the sequence to be

random.

Let’s test this notion on the most widely used source of random

numbers, the dice. A dice is supposed to select one of the numbers

in a fair and

independent fashion each time it is cast. In the following list we will argue on the merits of a few

possible outcomes.

-

{2}

- If we cast the dice just once, each of the possible outcomes are equally likely.

All sequences of length one are thus equally good.

-

{2, 4}

- There is nothing wrong with this sequence, too.

-

{6, 6}

- Casting doublets is not uncommon in the real world, thus they are not

reason enough to doubt the fairness of the dice.

-

{1, 2, 3}

- A sequence of length 3 is too short to arouse any suspicion, too. Such

simple patterns (runs up, runs down, only even numbers, only odd ones,

only primes, …) are actually quite likely to occur.

-

{1, 2, 3, 4, 5, 6, 1}

- With increasing length it is possible that a more clearly

visible pattern emerges within the numbers. Would you accept such a

sequence as generated by a real dice ? As these things still happen every

now and then in real life, the common answer to this question seems to be

“Yes, as long as this doesn’t happen too often.”.

-

{4, 2, 2, 2, 4, 2, 4}

- This sequence will give reason to doubt the fairness of the

dice. Whether the perceived skew is reason enough to outright reject the

sequence as random is a tricky question. After all, a real dice will show such

irregularities from time to time, too.

-

{6, 6, 6, 6, 6, 6, 6, 6}

- Although theoretical a possible outcome of a dice, this

sequence will probably not be accepted as such.

-

{6, 3, 2, 1, 2, 5, 4, 1, 6}

- At the first glance, this sequence looks quite random,

but closer scrutiny shows that it features alternately even and odd numbers.

As the probability for this to happen purely by chance is pretty small, one

gets suspicious about the “randomness” of the sequence.

-

{1,3,4,6,5,3,2,1,1,3,4,6,5,5,4,2,1}

- This one looks inconspicuous, too. But if

you draw the graph of the sequence you will notice a regularity: There are

too many long ascending or descending subsequences. As these long “runs”

should not appear that frequently in random sequences, this one does not

seem to be random.

-

{1,2,5,4,3,6,6,2,4,1,3,5,5,2,3,4,1,6}

- What about this sequence ? Can you

see any regularity in it ? Once again, a casual look will not find

anything suspicious, the distribution of the numbers seems to be balanced,

consecutive numbers do not have any special relation and there is nothing

wrong with the runs, too. Actually, the numbers are balanced way too

good as all six numbers appear before the first one gets repeated. In

other words, the sequence consists of three permutations of {1, …, 6}. If

the equidistribution of the numbers is so perfect, the randomness of the

sequence must be challenged.

-

{3,2,5,2,1,4,4,6,2,3,4,5,2,5,6,3,3,1,5,4}

- This sequence has a hidden regularity,

too. In previous sequences we have looked for correlations between consecutive

numbers. If we generalize this and take a closer look at numbers

steps apart, we have a more versatile tool for finding regularities. It turns out

that

holds for all values of .

Such a “long range” correlation should not happen in random sequences.

Did you see the one big fault in this sequence of would-be random sequences ? We did

not notice it because we looked only at single sequences. Can you find it now

?

Let us summarize the arguments:

- If the sequence has properties we do not expect to be present in random

numbers, we get suspicious.

If we argue about the “randomness” of a given sequence we try to find

reasons for rejecting it as random. There seems to be no way of asserting a

sequence to be random, it is only the absence of arguments to the contrary

that will lead to confidence in the sequence. The proper formulation in

the language of statistics is the following: The null hypothesis is always to

assume the sequence was indeed generated by a random process with well

known statistical properties. As we will see later, it is not possible to reverse

the problem and regard the non-randomness as the null hypothesis.

- Longer sequences are easier to judge.

Short sequences are likely to contain some sort of perceived regularity, thus

it is hard to reject such a sequence based on a suspicious pattern. If the

sequence is long enough to check if the pattern continues to appear in it,

one can try to determine if the pattern is part of some systematic fault or

just coincidence.

- There are a lot of ways a sequence can be suspicious.

Just when we thought we have found a sequence which does not exhibit

the patterns we have found in all the previous faulty ones, it turns out that

there is a different kind of regularity in it. Somehow this is just like the trick

question for the first natural number without any special properties. If such

a number existed, the very fact would make it special, thus there can be no

such number. We almost get the same feeling when we examine sequences

for their non-conspicuousness. As there are so many ways a sequence can

exhibit a pattern, a complete absence of patterns is just as conspicuous as

any weak regularity.

Furthermore, it is worth pondering if there are not so many patterns that

all sequences will exhibit one. We will take a closer mathematical look at

this question later.

- “Perfect randomness” is an oxymoron.

If the “random” sequence exhibits exactly the expected distribution this

will cause suspicion, too. A random sequence is supposed to deviate from

its distribution. The common measure for this is the variation. A sequence

with a perfect distribution will fail to have the same variation a random

sequence is supposed to have.

As the variation can be viewed as just another test statistic, it, too, should

vary in a certain way. From that point of view, a constant and perfect

variation is just as suspicious as a constant and perfect distribution. This

reasoning leads to the demand that not only the distribution of the numbers

should be as wanted, but also that the empirical higher moments should be

close to the values predicted by probability theory.

2.2 Formalizing the Intuitive Notion

Now that we have examined what we intuitively mean by saying “This sequence looks

random.” we can try to formalize this notion and develop a set of properties we want

to check if we have to judge a sequence and its generating algorithm. The goal in this

formalisation is to be able to delegate the testing to computer programs. As computers

are known to be very bad at spotting patterns, it will not be an easy undertaking to

find an algorithm which does as good as the human mind. We can only hope that all

systematic faults in the sequence will eventually cause a suspicious behaviour of the

sequence in a generic test.

In the following we abandon the dice as the example, and turn to uniformly distributed numbers

in the interval .

-

Distribution:

-

The first step in testing a sequence is usually to test its distribution characteristics.

That is, are the numbers equally spread over

?

In order to test the (empirical) distribution one partitions the interval

in sets

and compares the number of hits in each set to the size (measure) of that

interval.

In the discrete case this can be done by simply counting how often each

possible value appears in the sequence. If the counts differ significantly, the

distribution property of the sequence is inadequate.

In order to keep the problem manageable in the case of a huge number of

possible outcomes and in the continuous case, the bins (i.e. the )

used for counting will cover more than one outcome.

The layout of the partition is a crucial part of the test: If the

are simple intervals the test will measure the overall distribution of the

sequence. But the

could be the union of a set of small intervals, in which case the test targets

irregularities in the fine structure of the sequence.

Once we have finished the counting process we need some mathematically

justified criteria for interpreting the difference between the number of hits in

each

and the expected count. There are a number of possible algorithms for this,

the most popular of which are the -test

and the Kolmogorov-Smirnov test (often abbreviated as KS-test). The former

uses a test statistic based on the difference between expected and actual

count in each bin, whereas the latter compares the empirical distribution

function of the counts to the expected one.

-

Correlations:

-

It should be clear that any numbers in a deterministically generated and

thus reproducible sequence are trivially correlated. Therefore it makes no

sense to look for such intricate dependencies like the generation rule in the

sequence. We will restrict our search to much simpler correlations, which

makes additional sense because that will be the only kind of correlations

we can hope to find with the limited capabilities of a computer program.

There are two approaches to this:

Tests for special correlations check if the sequence exhibits a given kind

of regularity. An often used example is the run-test which measures

the frequency of ascending or decreasing parts in the sequences. The

distribution of these runs in random sequences is known, making it possible

to judge the sequence with respect to this type of correlation.

The serial test is a more general way of examining a sequence. It transforms

the problem of testing for correlation to the problem of testing for equidistribution

by looking at tuples composed of elements from the sequence. The size of

each tuple is called the dimension

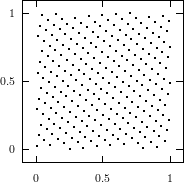

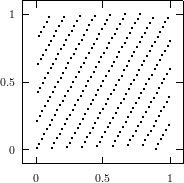

of the test. Common tests use either overlapping tuples defined as ,

or non-overlapping tuples defined as .

If there are no correlations in the original sequence the -tuples

are equidistributed in the unit cube of dimension ,

which can be checked using the techniques outlined above.

To illustrate this, let us examine the sequence

with the serial test of dimension 2.

As you can see, the fact that large and small numbers alternate causes a

significant deviation from the equidistribution of the points.

For a number of generators it is possible to derive analytical

bounds for the deviation from the equidistribution of

-dimensional

tuples as measured by the discrepancy.

If one does not restrict oneself to form tuples out of consecutive numbers, the resulting test

will be able to find more subtle kinds of correlations without resorting to high dimensions

.

While this modification hardly changes the empirical testing, only in the case of

the EICG analytical bounds have been derived for this generalized serial

test.

2.3 Randomness in Mathematical Terms

Now that we have clarified the intuitive understanding of the concept of testing

pseudorandom sequences, we will turn to the mathematical treatment of the subject.

Rather than providing a full scale discussion of the mathematical objects and

formalisms involved, which would exceed the scope of this thesis, we want to present

an introduction targeted at the mathematical layman. Our aim in this section is to

introduce as much of relevant concepts as is necessary to be able to explain the

problems one faces when testing pseudorandom numbers and comparing PRNG. We

refer to [85] for an in-depth discussion.

There is more than one mathematical approach to this topic. The following list

tries to introduce the different viewpoints and gives references for further

reading.

- Number-theoretic approach.

In our context, this branch of mathematics focuses on the equidistribution

of a sequence of numbers.

Various measures for the quality of the equidistribution were developed over

the years, of all these numbers, the discrepancy is the most common.

Theorems on the equidistribution usually deal with infinite sequences, thus

they are not particularly useful in conjecture with finite (or periodic)

sequences. For example, equidistribution of a sequence can be defined in

terms of the discrepancy in the following way:

See [51, 70, 72] for further reading.

- Kolmogorov complexity and information theory.

This approach targets the complexity and information content of the

sequence in question. One of the possible measurements is the minimal

size of a computer a program (or a Turing machine) which can reproduce

the sequence. In the optimal case, the program code will have to explicitly

contain the sequence in order to print it. Any possible shortcuts the program

can use (like exploiting dependencies) will be a measure for the lack of

randomness of the sequence.

Since all our sequences are generated by short programs, they a-priori fail

this test. Thus we will not consider this notion in our tests.

A similar approach is to focus on the amount of information contained in

the sequence. If the entropy is high enough, we will accept the sequence

as a good approximation of random numbers. Another way to express this

notion is to state that the sequence is not compressible.

Testing whether a sequence is compressible is not easy since all common

implementations cannot achieve the theoretically possible compression.

Only really bad PRN can be eliminated with programs like gzip or

compress. Extending the capabilities of these programs (for example

enlarging the range of the pattern search in gzip) might be a way to get a

workable test. As far as we know, nobody has tried this yet.

For larger sequences, the distinction between these ideas start to blur, as

the size of the information needed to transform one representation into the

other becomes irrelevant.

See Lagarias [52], Ming/Vitány [67] and Chaitin [5].

- Cryptographic considerations.

A sequence of PRN can be used to construct a stream cipher. If “true

random” numbers are used, this cipher is called the one-time pad and is

provably secure. So it is natural to ask what properties the PRN must have

to achieve a good level of security.

According to Rueppel [76] there are several approaches to the construction

of a secure stream cipher: The information-theoretic approach considers the

possibility in principle to derive the seed (i.e. the key) from an observation of

the PRN, the system-theoretic approach tries to make breaking the cipher at

least as hard as solving known “hard” problems like factoring or the discrete

logarithm. The complexity-theoretic approach tries to make sure that the

amount of work needed to break the cipher is of non-polynomial complexity,

randomized stream ciphers increase the magnitude of the code-breaker’s

problem by utilizing a public pool of random numbers.

See [77, 76] for a discussion of these ideas.

- Statistical approach.

The basic idea of statistical testing can be summarized as follows: From

a sample of supposedly random numbers a function called test statistic is

calculated. As the distribution of this function is known for the case of

real random numbers (otherwise the test does not make sense), one can

determine which kind of results are extremely unlikely to occur. Typically

this is formulated as intervals in the domain of the test statistic. These

intervals (usually called critical region) are selected in a way that the

probability that real random numbers lead to a test statistic there is smaller

than the level of significance (usually 0.05, 0.01 or 0.005). If now for a

sample of PRNG the test statistic falls into the critical region the common

inference is to reject the sample.

See [50, 4, 54] for further reading.

All common tests rely on the idea of statistical testing. In the following we will try

to elaborate on the motivation behind these tests, their mathematical foundation, their

power and limitations, and how to interpret their results.

2.3.1 Random Variables and Probability

First of all, let us take a closer look at what we want to simulate. Our target are

sequences of random numbers, which are realisations of a sequence of independent,

uniformly distributed random variables.

Random variables (RVs) are one of the main building blocks in probability theory.

They are used to assign each possible outcome (or, to be more exact, each reasonable

set of outcomes) of an experiment a real number which is interpreted as the probability

of this outcome.

But strictly speaking, the mathematical concept of RVs does not explicitly reflect

our intuitive ideas about randomness of events, on the contrary: RVs are just simple,

ordinary functions. One is tempted to ascribe mythical powers to RVs, like the ability

to randomly select one of a set of possible events. This is not true, they only

describe certain aspects of an idealized system which flips the metaphorical

coin.

So where is the link between the mathematical world of RVs and the real

life world of roulette tables ? Unfortunately there is none for single events.

Even if a RV does in fact model a real world event, hardly any conclusions

can be made about the outcome of the next single event. Even such unlikely

events as winning the jackpot in a lottery do happen every now and then,

and most people are not deterred by the extremely bad odds from playing

every week. On the other hand some people are scared of travelling by plane

because the probability of a safe flight is marginally less than one. In both cases

our experience tells us that the probability alone cannot predict the next

outcome.

But even such pretty definite sounding statements like “this event

will occur with probability 1” cannot guarantee the outcome of an event.

More insight into measure theory will tell us why such strange things

can happen. For example, the probability that the next realisation of an

-distributed

random variable will be a rational number is zero. This does not stop the real

world from delivering one of the infinite number of rational numbers, thus

rendering the statement “This experiment will only return irrational numbers”

incorrect.

We have seen that a RV cannot make concrete statements about a single

outcome, so we might ask what statements about outcomes it can make at all.

One way to formulate the meaning of probability is the following: [85, p.

10]

The probability assigned to an event expresses the expected average

rate of occurrences of the event in an unlinked sequence of

experiments.

We need to elaborate on two aspects of this definition as they are not as strict and

unambiguous as commonly demanded from a good definition.

First, what do we mean by “expected” ? That seems to indicate that

probability cannot be an intrinsic property on an event. There is no

mathematically satisfying way to assign a probability to an event based on a

(finite)

set of measurements, as it is extremely unlikely that another set of experiments will

result in the same value. The common way out is to make assumptions about some

parts of the experiment, like the Laplace assumption which assigns the same

probability to all underlying events. These assumptions are based on a mental model

of that event which includes a theory on how often something should occur. It is the

mathematician, the physicist or just some observer who forms a mental model based

on experiences or consideration. Such simplifying mental models of the real world are

ubiquitous as they provide an essential simplification in the way we view the world.

Other such simplifications include the concept of rigid bodies, fluids, or gases which

are abstractions of “a bunch of molecules tied together by various forces”. Just as the

laws of leverage rely in their formulation on the concept of forces and of rigid

bodies the laws of chance depend on the concept of probability assigned to

events.

The other critical word in the above definition is “unlinked”. By unliked we mean

that the outcome of one experiment does not influence the outcome of any subsequent

experiment. Common examples for unlinked experiments include drawing balls

from an urn (with putting them back in !), casting a dice, or the roulette

wheel. Please note that in all these examples there is a connection between

two successive experiments as the first one does influences the second. It is a

conscious decision by the observer that the re-shuffling of the balls in the urn

caused by the first experiment does not affect the probability in the second one.

This sounds almost like a paradox, as the re-shuffling surely does effect the

outcome. But remember, just above we noted that the probability of an event

does not determine the next outcome at all, so there is not contradiction

here.

We have to be careful with sequences of PRN and their relation to independent

random variables, too. The concept of independence is based on the concept

of distributions. As we cannot ascribe distributions to numbers, we cannot

use the term “independence” for sequences of PRN. We will use the word

correlations to refer to any unwanted relationship between elements in the

sequence.

2.3.2 Testing

As described above, the theory of random variables and probability tries to model

aspects of the physical world. The fundamental principles of science demand

justification in form of experiments for all such theories. For typical physical models

such experiments are usually easy to set up and follow the same scheme of comparing

an expected (calculated) result to the measurements of the actual physical event. If

they differ more than inaccuracies in the measurements would allow, the theory is

proven to be wrong. Philosophy of Science tells us that it is impossible to positively

prove a theory.

Do the same principles hold for conjectures in the field of probability, too ?

Unfortunately, they do not. Let us illustrate this with an example:

As a theory to test we might take the assumption that a given coin

is fair, meaning that the probability it lands with the heads side up is

. How

might an experiment designed to test this hypothesis look like ? Surely it will involve

throwing the coin a number of times and then comparing the result to the prediction.

Calculating the prediction based on the theory is simple, unfortunately the

prediction assigns each possible outcome a positive probability. Thus regardless

of the behaviour of the coin the result is consistent with the theory, as we

cannot rule out the measured result. If we have no way to reject a theory,

we have to find a different set of criteria according to which we can justify

theories.

The common way out is statistical testing. It should be clear that statistical

testing can never be as strict as testing in other areas. It is a heuristic approach to the

problem. As such, it relies on the good judgement of the tester and is not

objective. But before we elaborate on the shortcomings of statistical testing let us

summarize the basic procedure again, already using the test for randomness as the

example.

- The first step is to formulate the hypotheses. In our case the null hypothesis

states that the source of our pseudorandom numbers can be modeled as a

RV with distribution .

The alternative hypothesis

states that

is not true.

- We define a function called test statistic which maps the result of an

experiment into some mathematical domain. Typical test statistics for testing

uniform PRN are the number of runs, the discrepancy, the -statistic,

…. See [50, 54, 70, 66] for further test statistics.

- A level of significance

is selected which defines how strict the test will be and how much leeway we

will accept for the PRN. Common values for

are

or .

- Using the (hopefully) known distribution of the test statistic for the case of

being true, we determine the critical region

which is the area in the domain of the test statistic which covers its extreme,

unlikely values. The value for

is used to quantify what we mean by “extreme” and “unlikely” in such a way

that .

- Now the pseudorandom numbers are generated and are used to to calculate

the test statistic. If the value for the test statistic falls into

we say that the PRN have failed the test and we have reason to believe that

is true, otherwise the test is passed and we have no reason to reject .

This is the basic outline of all common empirical tests. We will discuss a few tests

and their results later in this thesis. So what are the weak spots in this method of

testing pseudorandom numbers ?

- PRN are not experiments.

First of all, when testing sequences of numbers generated by a PRNG basic

premises of statistical testing are violated.

The theory behind statistical testing assumes that we actually deal with

random events. It is thus a circular argument to conclude from the result

of such a test that the numbers in question are “random”. Only their

statistical properties that are subject to the test, not the basic premise that

the concept of random variables is a valid model for that experiment.

It is therefore not correct to speak of “statistical testing” with respect to

PRN testing. A more appropriate term is “numerical testing” as the

test examines only a numerical property of a fixed set of numbers.

The only “statistical” part of the test is the calculation what numerical

values for the test statistic are considered to be good and which are

considered to be bad.

- The test statistic is arbitrary.

The test statistic determines which aspect of the numbers we want to test.

Dividing

into the intervals

for ,

counting the hits in each interval, and then calculating the

statistic is a straight-forward test statistic which aims the the overall equidistribution

of the pseudorandom numbers in .

The choice of the bins (in this case intervals) seems to be a natural one.

But what bins should we use to measure the finer aspects of equidistribution ?

We could use just a large value for

and keep the intervals, but that would cause a problem with the validity of

the

approximation as the number of hits per bins decreases. An other option is

to use something like this: Define bin

as

for some suitable values for

and .

Then the bins are no longer simple intervals, but sets of intervals that are

spread over the unity interval. The value for

determines the width of each component interval.

Is there a natural choice for

? We do not think so. But the choice can be important as the result of

the test depends on it. Consider for example the set of numbers defined by

which is perfectly equidistributed in.

For certain relations between ,

and ,

like ,

the test will result in extremely bad

statistics. As an example, consider the case

where all numbers

fall into the same bin. For other values of ,

this set of PRN will exhibit no weakness in this test.

We have seen that even such simple changes to the test statistic, like

modifying the width of stripes, can completely change the result of the test.

One can imagine that completely different layouts of bins will lead to a

great variance in the test results, too. Thus we have to keep in mind that

the choice of the test statistic, and thus to some extent the result of the

test, is arbitrary.

One consequence of this fact is that we cannot declare one sequence of

PRN to be better than a second one just because we found a test where

the first one rates better, as a slightly modified test might produce exactly

the opposite result.

- Good PRN have to fail some tests.

What we strive for are numbers who behave in most respects like realisations

of -distributed

RVs, so it is a natural question how such ideal random numbers will perform

in our statistical test. The answer to this is quite simple: If we conduct a test

at the significance level

then a sequence of random numbers will fail the test with probability

.

If we have set

then we can expect a failure about once every hundred tries.

As PRN should model all aspects of real random numbers, they should fail

statistical tests at about the same rate.

It is therefore not advisable to outright reject a sequence of

PRN based on its failure in single tests.

- The variance of the test statistic can be important, too.

Classic statistical tests examine if the test statistic does not deviate from its

expected value too much. If we are only interested in the expected outcome of a

similar simulation problem, such one-level statistical tests are all we need in

order to be confident about the accuracy of the simulation.

On the other hand we might be interested in the distribution of the simulation’s

outcome. For this goal hitting the expected value is not enough, the variance of

the result is now important, too. Thus we will demand the same behaviour from

the test statistic, too.

Let us illustrate this principle with an example. We want to test the well known

strategy of doubling the ante in a game of roulette. It is supposed to guarantee

winning the initial ante and works like this: If we do not win in the

first round (and therefore win twice the ante) the ante is doubled for

the next round. If this round is won, we get back four times the initial

ante while we invested three times the initial ante resulting in a net

win of one ante. In case of bad luck we double the ante again hoping

for eight times the ante for an investment of seven. As we hope that

we will finally win before our capital is drained a net win seems to be

certain.

In order to simulate this we need random numbers to determine

whether we will win the current bet. The probabilities are

for winning

and

for losing each round, respectively. It seems to be natural to use the lengths

of runs as a test statistic to test our source of PRN for its fitness to

simulate a real roulette table. The probability that the maximal run

length in 500 tries is greater than 15, is smaller than all usual values for

, so

according to the corresponding statistical test we should reject all sequences where such runs

do occur.

When we now run the simulation with these prescreened sequences we will never

ever experience a loss as long as we have enough money for 15 steps of

doubling the ante. Thus we should conclude that the strategy works. As

we know, this is not true. So what went wrong with our simulation

?

The statistical test considered it equally important whether the

sequence in question was “well-behaved” or not, whereas the

simulation assigned completely different weights to those cases. Thus

the area that the test considered to be insignificant (smaller than

)

played a major role in the simulation (more than

).

There are some other cases of simulations where we are not so much

interested in the average case, but in the extreme ones. Consider for example

all those safety measures in power plants or other machinery where a

rare sequence of occurrences might lead to catastrophic results. When

simulating these security systems one must not a priori exclude unusual

sequences.

Please note that the distinction between level-1 and level-2 tests (tests which test

the distribution of the results of a level-1 test) is arbitrary. The test statistic of

a level-2 test is just another function of the underlying set of PRN,

too.

- Statistical tests cannot be used to objectively rate PRN.

One might be tempted to use a set of statistical test to once and for all declare

one generator superior to another generator. Intuitively this makes sense,

especially when comparing two generators of the same type. A common use for

this heuristic is the selection of optimal parameters for the LCG based on its

lattice structure [29, 73].

But is this judgement mathematically justified ? Leeb explains in [59] that such

judgement is not justified as all possible sequences of PRN of a given finite

length pass exactly the same number of statistical tests.

In order be able to use statistical tests as a criteria for the selection of

PRNG the user has to declare which properties he considers important. With

this knowledge it is possible to weight the tests and therefore select a suitable

generator for this specific application.

- Statistical tests are simulations with a known result.

Both statistical tests and simulations use a set of PRN to perform a more or less

elaborate calculation.

In a statistical test we draw a conclusion from the right side to left: Based on

the difference between expected and calculated value we judge the quality of the

PRN.

A simulation operates the other way round. Based on the (hopefully) known

quality of the PRN we hope that the simulation result is correct.

While generic statistical tests can be used for this reasoning, one can increase

their value by designing the tests to closely model the simulation. Thus the tests

can target exactly those properties in the PRN which will be significant in their

application.

2.3.3 Interpreting Test Results

In order to conclude this chapter on the notion of randomness let us recapitulate what

we know about testing a generator, and how we should proceed when we face the task

of selecting a generator for a particular simulation problem.

- Statistical testing cannot guarantee that the tested generator will perform

equally well in a real world simulation. Only if we are able to determine

which aspects of randomness are important for the simulation and

specifically test our source of pseudorandom numbers for these aspects, we

can mathematically justify our confidence in the validity of the simulation

[59].

- Empirical testing should be supplemented by analytical investigations

into the quality of the generator. Such calculations are often essential to

guarantee basic properties like period length, as well as to provide means

to select suitable parameters to avoid pitfalls like a degenerated lattice in

the case of linear generators.

Furthermore, analytical investigations can yield some insight in the overall

structure of a generator’s output which can be compared to the properties

required in the simulation. The lattice structure of the LCG might be

actually useful in Quasi-Monte Carlo integration whereas it can be harmful

in geometric problems, e.g. the nearest-pair test [14, 54].

- Level-one tests target only the expected value of the test statistic. Often

this is not enough, making it necessary to test the distribution of the test

statistic. Such two-level tests guarantee that the proper irregularities are

present in the PRN, too. Whether we should strive for such variance in the

generator is up to its final application.

- Rejecting a generator is rejecting one facet of randomness. It is

self-deception to claim that one rejects a generator based on its lack of

randomness. One can only state that one does not want that particular

aspect of randomness. Sequences we will intuitively classify as non-random

may be important as input for the simulation in order to get correct results.

- As we have seen before, the results of a test can be extremely sensitive to its

parameters. Thus statements like “generator x passes the y-test” have little

relevance unless the exact parameters used in the test and in the generator

are published, too.

- The result of a single test is not enough to assess the quality of generator.

Only a battery of tests and comparisons to the performance of other

generators in the same test suite enable the mathematician to pass

judgement on a generator.

Chapter 3

Theoretical Results

In this chapter we will discuss analytically derived properties of the explicit inversive

congruential generator (EICG). We will use results obtained for the LCG to serve as a

reference as the LCG is the most commonly used generator. Let us start by repeating

the definition of the EICG:

Let be a (large)

prime and .

The explicit inversive congruential generator (abbreviated as “EICG”) with

parameters

and defines

a sequence

in by

|

| (3.1) |

and a sequence of

pseudorandom numbers in

by

Please remember that we perform all calculations except the final scaling in the finite

field .

will denote the

non-zero elements of ,

that is . The over-line

denotes the multiplicative

inversion in for all

non-zero elements .

With the special case

added, is a bijective

function from

onto . Furthermore,

we have

and for

all .

The latter identity is due to Fermat’s Little Theorem.

Note that from the explicit definition of the sequence

we

can easily derive a recursive description:

In order to achieve maximal period length

, the parameters

,,

and can be freely

chosen from as long

as is prime and

. To see this, consider the

function which is composed

of bijective functions in and

thus is bijective, too. As

in we have

for all

, thus the sequence

is purely periodic

with period length .

We will only consider full period generators, that is

.

We will write

to denote the output of a particular instance of the EICG method. Unlike Leeb [59, p.

89] we mean the whole infinite (but periodic) sequence, and not just the first

numbers. This way, no special treatment of wrap-arounds is needed when considering

subsequences.

3.1 Relations between different EICG

The choice of parameters is simple for an EICG, but not all choices will lead to

completely different pseudorandom numbers. In this section we will examine the

relations between EICGs with the same modulus, but different parameters

,

, and

.

These results are helpful for the implementation, as one can eliminate an addition

modulo ,

as well as to the theoretical investigation as they provide a very elegant way to

describe sub-streams. We will elaborate on this idea which is due to Niederreiter [71, p.

5] later on.

First of all, let us make a rather trivial observation on the role of the parameter

.

Observation 3.1 Let .

Then we can write the sequence

as .

In other words, the second sequence is first one shifted by .

Proof: This relation follows from the fact that

and

appear only

as their sum

in the definition of the EICG. __

The following observation is taken from Leeb [59, p. 89]; it states that one of the

parameters is redundant.

Observation 3.2 Let

be prime and be fixed.

Then we have for all

and

|

| (3.5) |

Proof: We base the proof on the recursive definition of the EICG. As the recursion does only

depend on ,

which is constant, it is sufficient to show that the

of

these generators are equal. In the first two cases we have

and the third equality translates to

_

The third equality can be used to rewrite any EICG as an EICG with

, but a different

value for .

Thus the generating formula can always be rewritten as

which saves one addition. The addition

can be implemented by simply incrementing the previous value modulo

, thus

we need to perform only one increment, one multiplication, one inversion, and one

division to generate the next pseudorandom number.

There is an obvious connection between

and

,

too:

Observation 3.3 Let

be prime, ,

and .

The sequence

can be obtained by selecting every -th

element from the sequence .

Proof: The sequence generated by taking every

-th element in the

sequence can

be written as .

We have

and thus

where .

_

These three observations give us the tools to show that all

maximal period EICGs can be derived from the “mother-EICG”

in the

following way:

- Start with the sequence .

- Shift the sequence by .

The result is .

- Take every th

element of that sequence. According to Observation 3.3 we get .

- According to Observation 3.2 this sequence is the same as .

Can these insights help us in the theoretical investigation on how samples from

an EICG behave under various tests ? Yes, they provide a very convenient

and elegant formalism to describe subsequences and various kinds of

-tuples

generated from the stream of pseudorandom numbers. With this formalism, the proofs

of discrepancy estimates and non-linear properties are very concise.

First of all, we do not need to bother with the parameter

in

the theoretical investigation as we can always rewrite the EICG to one with

.

Second, any property of a sequence of EICG numbers, which is valid independently

of the parameters used, is immediately valid for subsequences consisting of every

-th

element. One direct consequence of this is, that once we can prove that pairs of

consecutive numbers are uncorrelated for all valid parameters, we can rule out the

possibility of long-range correlations at critical distances. See [10, 20] for a discussion

of such problems inherent to the LCG.

The third gain, due to Niederreiter [71], is to be able to write almost arbitrary

-tuples

formed out of the stream of EICG numbers as parallel streams. Such

-tuples

as usually used to examine the correlation between successive numbers. For example,

the overlapping serial test (see page ) tests the equidistribution of the vectors

|

| (3.6) |

for in order to

test the PRN

for correlations. If we pick the first coordinate of each vector we get the original sequence.

Picking always the second results in the original sequence shifted by one. According to

the above equivalences we can write this shifted sequence as an EICG with the same

parameter ,

, and a

different .

Thus we have

|

| (3.7) |

where is the sequence

generated by the EICG .

The obvious generalisation is to allow almost arbitrary

EICGs

for each coordinate.

In the following, we will prove all statements on the behaviour of

-tuples in

terms of these parallel streams. For that, we will need to restrict the possible values for

the and

in order to avoid certain

special cases like .

As we will see later in the various proofs, we need the condition

for all

. Thus

we have the following definition:

Definition 3.1 (Parallel Streams) Let

be prime,

, and

,

such

that

are distinct. Then we put

|

| (3.8) |

and define a sequence

in the -dimensional

affine space over

by putting

An interesting special case of parallel streams, which is more general than the overlapping

-tuples

considered above, can be obtained as follows. Choose an integer

with

and

integers

and put

where the are as in (3.1).

This sequence of points in

can be written in terms of parallel streams according to Definition 3.1 by putting

and

for

. It is easy to

show that the

are distinct, thus all results concerning parallel streams are valid for this general method of

composing -tuples,

too.

The non-overlapping tuples

are covered by the concept of parallel streams, too. To see this, set

and

for

.

3.2 Structural Properties

The best known structural property of any pseudorandom number generator is the lattice

structure of the LCG. Coveyou/MacPherson [9] and Marsaglia [65] noted first that

-tuples

formed from successive LCG-numbers form a lattice in the

-dimensional

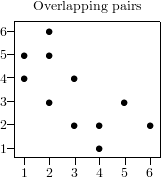

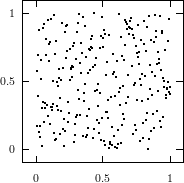

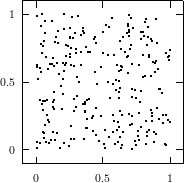

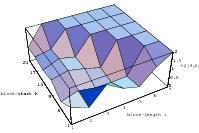

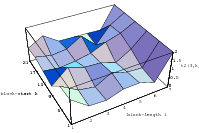

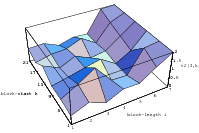

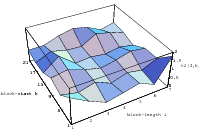

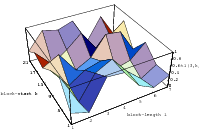

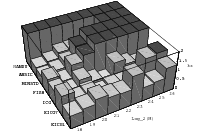

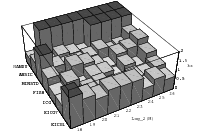

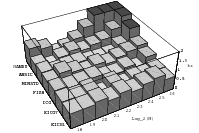

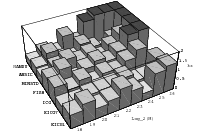

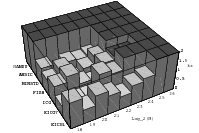

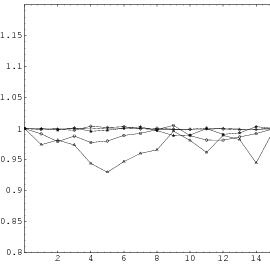

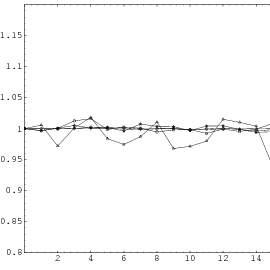

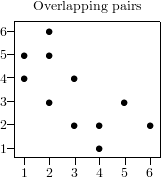

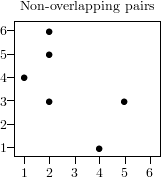

cube. Figure 3.1 depicts the lattice formed by plotting overlapping 2-tuples

for the full period of two “toy” generators. “Production quality” generators

exhibit the same structure, you just have to zoom into the image to see the

pattern.

The shape of the lattice depends very strongly on the parameters

and

of the

LCG. Thus various measurements on the coarseness of the lattice are used to select suitable

parameters

and .

That way, a weakness of the LCG turns into a strength, as one can guarantee a

well-behaved lattice for low dimensions as long as the parameters are chosen well

enough.

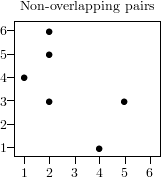

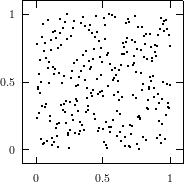

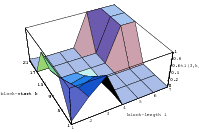

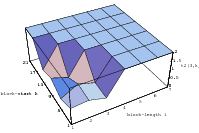

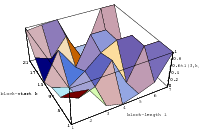

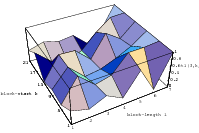

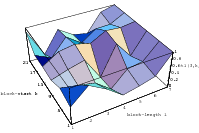

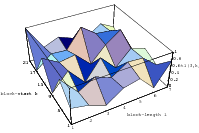

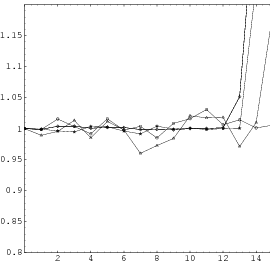

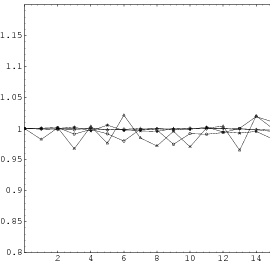

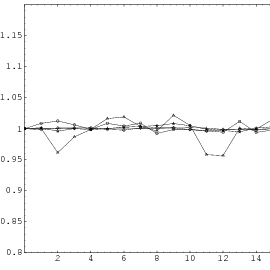

Does the EICG exhibit a similar structure ? Figure 3.2 suggests that the

EICG does not possess this linear property, although one can see some other

regularities. In fact, one can prove a very stringent non-linearity property for

-tuples

taken from an EICG. The theorem describing this is due to Niederreiter [71].

Theorem 3.1 Let

as in Definition 3.1, then every hyperplane in

contains at most

of the points ,

,

with .

If the hyperplane passes through the origin of ,

then it contains at most

of these points .

Proof: All calculations in this proof are performed in the finite field

.

Furthermore, remember that according to Definition 3.1, the

are

distinct.

A hyperplane

in is uniquely

defined by a vector

and a scalar as

. We restrict our search for

points on a hyperplane .

Thus for we have

according to (3.8) ,

therefore we can rewrite the hyperplane equation for

as

By clearing denominators, we see that

is a root of the polynomial

If ,

then is a

nonzero polynomial

of degree over

. Since such a

polynomial has at most

roots in , the

hyperplane

contains at most

of the

with .

If ,

that is ,

we get

whose degree is at most .

It remains to show that is

not the zero polynomial. As

is not the zero vector, one of its coordinates is nonzero. For

we

have

because is the chosen

nonzero coordinate, and the

as well as the

are nonzero according to the conditions of the theorem. As we have found

for

some ,

cannot be the zero polynomial. __

This theorem proves that -tuples

taken from an EICG do not form any linear structure such as a lattice. But that

does not mean that no other kind of pattern emerges in plots of pairs of

consecutive